Consider an agent in an environment. At time t, the current state is S(t), and R(t) is the reward the agent received for reaching S(t). To proceed to the next state, the agent takes an action A(t). As a result, it receives a reward R(t+1) and moves to a new state S(t+1).

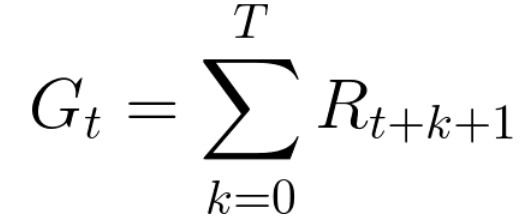
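The interaction loop described above can be sketched in a few lines of Python. This is only an illustrative sketch: the `LineWorld` environment, its reward of 1.0 at the target, and the random agent are all hypothetical and are not taken from this article.

```python
import random


class LineWorld:
    """Hypothetical toy environment: states 0..4 on a line, target is state 4."""

    def __init__(self, target=4):
        self.target = target
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (left) or +1 (right); the state is clamped to [0, target]
        self.state = max(0, min(self.target, self.state + action))
        done = self.state == self.target
        reward = 1.0 if done else 0.0  # R(t+1): reward for this transition
        return self.state, reward, done


def run_episode(env, rng, max_steps=1000):
    """Run one episode with a random agent and return the list of rewards."""
    state = env.reset()  # S(t)
    rewards = []
    for _ in range(max_steps):
        action = rng.choice([-1, 1])            # A(t)
        state, reward, done = env.step(action)  # S(t+1), R(t+1)
        rewards.append(reward)
        if done:  # the target has been reached
            break
    return rewards


rewards = run_episode(LineWorld(), random.Random(0))
print(len(rewards), sum(rewards))
```

Each pass through the loop is one time step: the agent picks an action, and the environment answers with the next state and a reward.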

The above process continues until we reach the target. As we discussed in the previous article, the main aim of the agent is to maximize the reward. The cumulative reward at a given time is the sum of all the rewards the agent expects to receive in the future, and can be written as

$$G_t = R_{t+1} + R_{t+2} + R_{t+3} + \dots$$

In summation form, it can be written as

$$G_t = \sum_{k=0}^{\infty} R_{t+k+1}$$

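But simply adding the rewards like this gives every future reward the same weight. Sometimes we care about future rewards and sometimes we care mainly about immediate ones, so we can modify the above equation as follows:

$$G_t = R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \dots$$

And this equals

$$G_t = \sum_{k=0}^{\infty} \gamma^k R_{t+k+1}$$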

As the equation suggests, each reward is multiplied by gamma raised to the power of the number of time steps into the future. Gamma is called the discount factor, and its value lies in [0, 1]. This means that we are discounting future rewards: the further away a reward is, the less it contributes. Note that this does not mean future rewards are irrelevant; they are simply weighted less than immediate ones.

Let's analyse how the gamma value affects the cumulative reward and the agent.

If gamma is larger (closer to 1), future rewards are discounted less and the agent focuses on long-term rewards. If gamma is smaller (closer to 0), future rewards are discounted more and the agent focuses on immediate rewards.
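To see the effect of gamma in numbers, here is a small sketch that computes the discounted cumulative reward for a short reward sequence. The function name `discounted_return` and the reward values are made up for illustration.

```python
def discounted_return(rewards, gamma):
    """Compute G_t = R_{t+1} + gamma * R_{t+2} + gamma^2 * R_{t+3} + ..."""
    g = 0.0
    # Work backwards so that each step is G = R + gamma * G_next.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


rewards = [1.0, 1.0, 1.0, 10.0]  # hypothetical: a large reward arrives late
for gamma in (0.0, 0.5, 0.9, 1.0):
    print(gamma, discounted_return(rewards, gamma))
```

With gamma = 0 only the immediate reward counts, so the result is 1.0. With gamma = 1 every reward counts equally, so the result is 13.0. The values in between show the late reward of 10 contributing more and more as gamma grows.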

Approaches to Reinforcement Learning

The three main approaches to reinforcement learning are the value-based, policy-based, and model-based approaches.

Value Based Approach

The value of each state is the total reward the agent can expect to accumulate in the future, starting from that state. The agent uses this value function to select the next state: at each step it moves to the state with the highest value.
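As a rough sketch of this idea, the snippet below picks the reachable state with the highest value. The state names, the values in `state_values`, and the `neighbors` map are hypothetical; in practice the agent would learn the values from experience.

```python
# Hypothetical state values V(s); in practice the agent would learn these.
state_values = {"A": 0.2, "B": 0.7, "C": 0.5}

# Hypothetical map from the current state to the states reachable in one step.
neighbors = {"start": ["A", "B", "C"]}


def greedy_next_state(current, neighbors, state_values):
    """Pick the reachable state with the highest value."""
    return max(neighbors[current], key=lambda s: state_values[s])


print(greedy_next_state("start", neighbors, state_values))  # B
```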

Policy Based Approach

In this approach we look for the optimal policy directly, that is, the policy that achieves the maximum expected future reward. There are two types of policy. A deterministic policy always chooses the same action for a given state, while a stochastic policy chooses its action according to a probability distribution. The agent learns a policy function that tells it how to act in each state.
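The difference between the two types of policy can be sketched as follows. The states `s0` and `s1`, the actions, and the probabilities are all invented for this example; a real agent would learn them.

```python
import random

ACTIONS = ["left", "right"]


def deterministic_policy(state):
    """Always returns the same action for a given state."""
    table = {"s0": "right", "s1": "left"}  # hypothetical state-to-action table
    return table[state]


def stochastic_policy(state, rng):
    """Samples an action from a probability distribution for the state."""
    probs = {"s0": [0.2, 0.8], "s1": [0.9, 0.1]}  # hypothetical P(action | state)
    return rng.choices(ACTIONS, weights=probs[state], k=1)[0]


rng = random.Random(0)
print(deterministic_policy("s0"))
print([stochastic_policy("s0", rng) for _ in range(5)])
```

Calling the deterministic policy on `s0` gives `right` every time, while the stochastic policy gives `right` most of the time and `left` occasionally.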

Model Based Approach

In this approach we build a model of how the environment behaves, and the agent uses that model to learn how to act. Because each environment needs its own model, the agent is tailored to that specific environment.

Tasks in Reinforcement Learning

There are two types of reinforcement learning task: episodic and continuous.

Episodic task

In an episodic task, the agent starts from a particular starting point and, as time proceeds, reaches an end point where it stops.

For example, when an agent plays a game of chess there is a starting point and an ending point; the ending point is where the agent wins, loses, or draws the game.

Continuous Task

In a continuous task, the agent continues its interaction with the environment until we decide to stop it.

For example, an agent doing stock market prediction.

Exploration and Exploitation

Initially, the agent doesn't have full information about the environment. So the agent first explores the environment to learn about it, trying new actions instead of repeating the ones it has already tried. This is called exploration.

After some time, when the agent has explored almost all of the states, it starts to exploit what it has learned by choosing the actions it already knows to be rewarding. This is called exploitation.
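One common way to balance exploration and exploitation is the epsilon-greedy rule, which is not covered in this article but is sketched here for illustration. The action values and the epsilon settings below are assumptions chosen only to show the two behaviours.

```python
import random


def epsilon_greedy(action_values, epsilon, rng):
    """With probability epsilon explore; otherwise exploit the best-known action."""
    if rng.random() < epsilon:
        return rng.randrange(len(action_values))  # explore: random action
    # exploit: index of the action with the highest estimated value
    return max(range(len(action_values)), key=lambda a: action_values[a])


action_values = [0.1, 0.5, 0.3]  # hypothetical estimated value of each action
rng = random.Random(0)
early = [epsilon_greedy(action_values, 0.9, rng) for _ in range(10)]  # mostly exploring
late = [epsilon_greedy(action_values, 0.0, rng) for _ in range(10)]   # only exploiting
print(early, late)
```

A high epsilon corresponds to the early exploration phase, and lowering epsilon over time moves the agent towards exploitation.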

Reinforcement learning is all about taking suitable actions at the right times, interacting with the environment, learning from it, collecting rewards, and maximizing them by taking better actions. Reinforcement learning might be something new for you, but it can be very interesting once you get the ideas. Do not forget to read our previous post on RL.

Keep Reading!
